20. Intro to using AI


Norms for the use of artificial intelligence are only now developing and will continue to evolve. Moreover, AI itself is developing rapidly, and specific guidance on its capabilities and limitations is likely to become outdated quickly. This chapter was written in July 2024, shortly after the public release of ChatGPT 4o, and reflects the state of generative AI at the time of writing.

There’s no shortage of advice on how best to use generative AI, and this advice is sometimes superstitious, contradictory, or otherwise questionable. While it’s impossible to provide a perfect formula for every situation, some general principles seem to be helpful.

Leo Lo (2023), Dean of UNM’s College of University Libraries and Learning Sciences, proposes a “CLEAR” framework. Prompts should be:

- Concise: brevity and clarity in prompts

- Logical: structured and coherent prompts

- Explicit: clear output specifications

- Adaptive: flexibility and customization in prompts

- Reflective: continuous evaluation and improvement of prompts

Good prompts should be as short as possible without leaving out necessary information. They should outline a logical sequence of steps and specify the desired output explicitly. The prompter should adapt later prompts in response to the initial output, and allow the generative AI system to reflect on both the limits and the successes of its outputs.
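For readers who assemble prompts programmatically, the first three CLEAR principles can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `build_prompt` and its parameters are hypothetical and are not part of Lo's framework. It keeps the task short (concise), numbers the steps in order (logical), and states the desired output format (explicit). The adaptive and reflective principles are left to the prompter, who would revise the arguments after reading the model's response.

```python
def build_prompt(task, steps, output_format):
    """Assemble a prompt from a short task, ordered steps, and an output spec.

    Hypothetical helper for illustration only.
    """
    # Concise: open with a single short statement of the task.
    lines = [task.strip(), "", "Steps:"]
    # Logical: number the steps so the sequence is unambiguous.
    for number, step in enumerate(steps, start=1):
        lines.append(f"{number}. {step.strip()}")
    # Explicit: close by stating exactly what the output should look like.
    lines += ["", f"Output format: {output_format.strip()}"]
    return "\n".join(lines)


prompt = build_prompt(
    task="Summarize the attached syllabus for first-year students.",
    steps=[
        "List the major assignments.",
        "Note each due date.",
        "Flag any required materials.",
    ],
    output_format="A bulleted list of no more than 150 words.",
)
print(prompt)
```

The resulting text can be pasted into any chat interface. If the response misses the mark, the prompter can change one argument at a time and compare the results.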

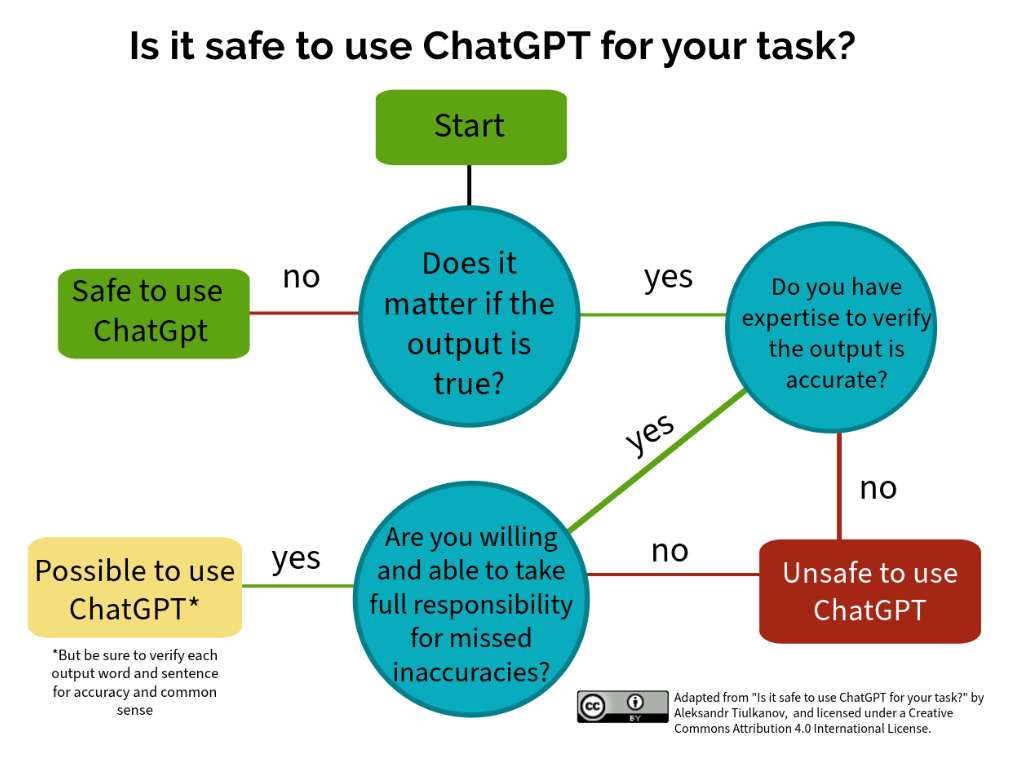

When integrating AI into any academic work, it’s crucial to recognize both its potential and its limitations regarding trustworthiness and truth. AI can be a powerful tool, but the nature of how it works, combined with the fact that it is rarely connected to a database of known facts, means that it is not a reliably trustworthy source of true information. One significant concern is the phenomenon known as “hallucinations,” where AI systems produce information that appears credible but is factually incorrect or entirely fabricated. These errors can stem from various factors, including biases in training data or the limitations of the algorithms themselves.

As educators and researchers, we must approach AI outputs with a critical eye. Always cross-reference AI-generated content with reliable sources to verify its accuracy. Fact-checking is an essential practice to maintain the integrity of our academic work and ensure that the information we disseminate is both trustworthy and truthful. By combining AI’s capabilities with rigorous scholarly standards, we can harness its benefits while safeguarding against misinformation.

At present, the US Copyright Office only grants copyright to original works by human creators. This means that natural phenomena, mathematical formulas, or works of art created by non-human animals cannot be copyrighted. Similarly, works created solely by artificial intelligence cannot be copyrighted.

The image on the left is a photograph created by a Celebes crested macaque playing with a camera, while the image on the right is a picture of a monkey taking a selfie generated by DALL-E, an AI model. Neither image can be copyrighted.

However, most actual “AI generated” works are really collaborations between human and artificial intelligence, which can lead to complicated situations. For example, in 2023 the US Copyright Office reviewed the copyright registration for Zarya of the Dawn, a graphic novel with human-written text and images created by the AI model Midjourney. The Office eventually concluded that the text of the novel fell under copyright, but the images did not. It is likely that many even more complicated cases will arise in the future, and these will need to be evaluated on a case-by-case basis.

While major citation systems have begun to develop standards for citing AI, the resulting citations can sometimes be incomplete. For example, the current edition of the MLA Handbook doesn’t require authors to cite the model version in their citations. Many authors have taken it upon themselves to include a more complete acknowledgment of their use of AI. Typically, these acknowledgments include:

- A reference and link to the AI model used (e.g. ChatGPT 4o)

- The specific tasks or purposes the model was used for

- A final acknowledgment that the author takes responsibility for the work created

These conventions will continue to evolve.

Bias in AI is a multifaceted issue that arises primarily from biased training data and the inherent design of AI systems. AI systems are trained on data provided by humans, and these training sets inevitably contain both explicit and unconscious bias. When AI models are trained on such data, they can perpetuate and even amplify existing prejudices and societal inequalities. For instance, if an AI system is trained on a dataset with demographic imbalances, it might produce skewed results that unfairly disadvantage certain groups. In the broader world, this can lead to discriminatory outcomes in various applications, such as hiring practices, loan approvals, and even sentencing decisions.

To combat this, many AI models include “guardrails”, designed to prevent harmful behavior and ensure ethical usage. While important, these guardrails can also introduce unintended issues. They can sometimes lead to over-censorship or the suppression of valid but controversial viewpoints. Moreover, these guardrails can sometimes cause issues for scholars attempting to study and explain the history of bias and discrimination. Educators and researchers should be mindful of these guardrails’ existence, understanding their purpose while also critically evaluating their impact on the AI’s outputs.

Beyond the obviously inappropriate nature of presenting the outputs of AI models as original work, there are other more subtle inappropriate uses of AI. While AI can assist in many aspects of teaching, over-reliance or inappropriate use for tasks such as providing personalized feedback, classroom discussion, and even replies to emails can imply a lack of respect for the needs and individuality of students. Teaching is inherently a human endeavor that thrives on empathy, understanding, and the ability to adapt to the nuanced needs of individual students. The humanity of students must be respected, recognizing their unique perspectives, emotional states, and personal growth journeys. Similarly, the humanity of teachers is vital, as their passion, intuition, and personal investment in their students’ success cannot be replicated by algorithms. AI is a valuable tool only to the extent that it aids in connection, communication, and self-expression. Any use of AI that hinders these goals may be considered inappropriate.

License and Attribution

Intro to using AI was written by David Gustavsen, Jennifer Jordan, Jeffrey Houdek, Irina Meier, Xaver Neumeyer, Gina Rowe, Arianna Trott, and Justina Trott and is licensed under a Creative Commons Attribution 4.0 International License.