3.6 Multimodal Perception

Though we have spent most of this chapter covering the senses individually, our real-world experience is most often multimodal, combining input from several senses into one perceptual experience. It should come as no surprise, then, that at some point information from each of our senses is integrated into a unified representation of the world. Information from one sense can influence and interact with how we perceive information from another, a process called multimodal perception.

Interestingly, we can respond more strongly to a multimodal stimulus than to the sum of the responses to each of its single-modality components presented on its own, an effect called the superadditive effect of multisensory integration. This helps explain how you can still understand what friends are saying to you at a loud concert, as long as you can see their faces as they speak. If you were having a quiet conversation at a café, you likely wouldn’t need these visual cues. In fact, the principle of inverse effectiveness states that the benefit of additional cues from other modalities is smallest when the initial unimodal stimulus is strong and largest when it is weak (Stein & Meredith, 1993).
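To make the arithmetic behind superadditivity and inverse effectiveness concrete, here is a minimal Python sketch using made-up response values. You can think of these values as a multisensory neuron’s firing rate in spikes per second. None of the numbers come from a real study. Superadditivity is checked by comparing the audiovisual response with the sum of the two unimodal responses. The percentage-enhancement measure compares the combined response with the strongest single-modality response. It is one common way of quantifying multisensory gain, but it is an assumption of this sketch and is not defined in this chapter.

```python
# A minimal illustration of superadditivity and inverse effectiveness.
# All response values below are hypothetical, chosen only to show the arithmetic.

def is_superadditive(auditory, visual, audiovisual):
    """True if the multimodal response exceeds the sum of the unimodal responses."""
    return audiovisual > auditory + visual


def enhancement_percent(auditory, visual, audiovisual):
    """Percent gain of the multimodal response over the strongest unimodal response.

    This particular index is an assumption of this sketch, not a definition from the text.
    """
    strongest = max(auditory, visual)
    return 100.0 * (audiovisual - strongest) / strongest


# (auditory, visual, audiovisual) responses for a weak and a strong stimulus pair
examples = {
    "weak (faint sound, dim flash)": (2.0, 3.0, 10.0),
    "strong (loud sound, bright flash)": (20.0, 25.0, 35.0),
}

for label, (a, v, av) in examples.items():
    print(f"{label}: superadditive={is_superadditive(a, v, av)}, "
          f"enhancement={enhancement_percent(a, v, av):.1f}%")
```

With these invented numbers, the weak pair gives a combined response larger than the sum of its parts and a gain of about 233%. The strong pair is not superadditive, and its gain is only 40%. This is the pattern the principle of inverse effectiveness describes: the weaker the unimodal inputs, the larger the relative benefit of combining them.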

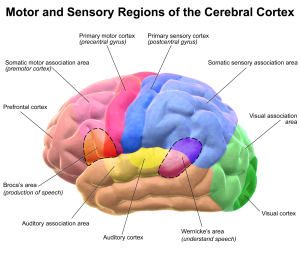

A surprisingly large number of brain regions in the midbrain and cerebral cortex are involved in multimodal perception. These regions contain neurons that respond to stimuli from not just one, but multiple sensory modalities. For example, a region called the superior temporal sulcus contains single neurons that respond to both the visual and auditory components of speech (Calvert, 2001; Calvert, Hansen, Iversen, & Brammer, 2001). Such regions, called multisensory convergence zones, are a kind of neural intersection of information coming from the different senses. That is, neurons devoted to processing one sense at a time (say, vision or touch) send their information to the convergence zones, where it is processed together.

Multisensory neurons have also been observed outside of multisensory convergence zones, in areas of the brain that were once thought to be dedicated to the processing of a single modality (unimodal cortex). For example, the primary visual cortex was long thought to be devoted to the processing of exclusively visual information. The primary visual cortex is the first stop in the cortex for information arriving from the eyes, so it processes very low-level information like edges.

Interestingly, neurons have been found in the primary visual cortex that receive information from the primary auditory cortex (where sound information from the auditory pathway is processed) and from the superior temporal sulcus (the multisensory convergence zone mentioned above). This is remarkable because it indicates that visual processing is influenced by auditory information from a very early stage. Such findings have led some researchers to argue that the cortex should not be thought of as divided into isolated regions that each process only one kind of sensory information. Rather, they propose that these areas merely prefer to process information from specific modalities, but engage in low-level multisensory processing whenever it benefits the perceiver (Vasconcelos et al., 2011).

Multimodal Phenomena

Probably the most researched multimodal perception context is audiovisual integration. Many studies have looked at how we combine auditory and visual events in our environment into one coherent signal to be processed and interpreted. A common application is speech perception, especially in environments where the source or nature of the signal in one modality or the other is ambiguous. Early research on this examined how accurately people recognize spoken words presented in a noisy context, like trying to talk with a friend at a crowded party (Sumby & Pollack, 1954). To study this phenomenon experimentally, participants were presented with irrelevant noise (“white noise,” which sounds like a radio tuned between stations). Embedded in the white noise were spoken words, and the participants’ task was to identify them. There were two conditions: one in which only the auditory component of the words was presented (the “auditory-alone” condition), and one in which both the auditory and visual components were presented (the “audiovisual” condition). The noise levels were also varied, so that on some trials the noise was very loud relative to the words, and on other trials it was very soft relative to the words. Sumby and Pollack (1954) found that words were identified much more accurately in the audiovisual condition than in the auditory-alone condition. In addition, the pattern of results was consistent with the principle of inverse effectiveness: the advantage gained from audiovisual presentation was greatest when auditory-alone performance was lowest (i.e., when the noise was loudest).

An interesting illusion that emerged from this research is known as the McGurk Effect (e.g., McGurk & MacDonald, 1976). Under some conditions, hearing a speaker produce one sound (e.g., “ba”) while seeing their lips form the movements for a different sound (e.g., “ga”) results in the perception of a sound that was not present in either modality (e.g., “da”)! See an example of the McGurk Effect in the video below. The strength of this illusion can depend on factors such as developmental age or fluency in a language.

We find a similar influence of vision on auditory perception in the domain of music. Schutz and Lipscomb (2007) found that seeing a percussionist’s striking gesture influenced the perceived duration of the note played, concluding that “…while longer gestures do not make longer notes, longer gestures make longer sounding notes through the integration of sensory information.”

In my own research, I found evidence suggesting that seeing a musician play can also influence the perceived quality of the sound. In this case, seeing a cellist use “vibrato” (vibrating the string being played with the left hand) led participants to report stronger vibrato even when there was little or no vibrato in the audio recording (Graham, 2017). Below is an example stimulus video of the author, pairing mismatched cello video (note the vibrato in the left hand) with cello audio that has no vibrato.

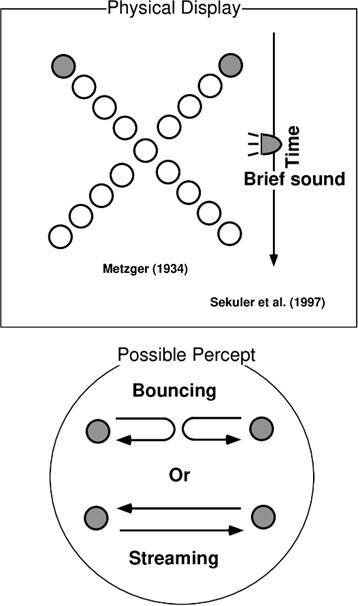

Our perception of ambiguous visual events can also be influenced by accompanying auditory events. Sekuler and colleagues (1997), among others, have conducted studies in which two balls appear to move across a screen toward each other. Without more information, it is unclear what happens when their paths intersect. If an auditory event (e.g., a click) occurs within a short enough time window before or after the balls come into apparent contact, most participants perceive them as bouncing off each other. With no such auditory event, participants more often perceive the balls as passing through or over/under each other and continuing on their way. Note that the visual and auditory stimuli must occur close together in time to be perceived as coming from the same event. The same holds for spatial relationships (often referred to as the spatial principle of multisensory integration): multisensory “enhancement” (e.g., a superadditive neuronal response) is observed when the sources of stimulation come from the same or nearby locations.

Finally, not all multisensory integration phenomena involve audiovisual crossmodal influence. One particularly compelling multisensory illusion involves the integration of tactile and visual information in the perception of body ownership. In the “rubber hand illusion” (Botvinick & Cohen, 1998), an observer is positioned so that one of their hands is not visible. A fake rubber hand is placed near the obscured hand, but in a visible location. The experimenter then uses a light paintbrush to stroke the obscured hand and the rubber hand simultaneously, in the same locations. For example, if the middle finger of the obscured hand is being brushed, then the middle finger of the rubber hand is brushed as well. This sets up a correspondence between the tactile sensations (coming from the obscured hand) and the visual sensations (of the rubber hand). After a short time (around 10 minutes), participants report feeling as though the rubber hand “belongs” to them; that is, that the rubber hand is part of their body. This feeling can be so strong that surprising the participant by hitting the rubber hand with a hammer often leads to a reflexive withdrawal of the obscured hand, even though it is in no danger at all. It appears, then, that our awareness of our own bodies may be the result of multisensory integration. See an example of the rubber hand illusion below.

Synesthesia

Some individuals may experience multisensory perception even from “unimodal” (single-sense) events. Synesthesia is a condition in which a stimulus in one sensory or cognitive domain (often called the Inducer) evokes a sensation in a different domain (called the Concurrent experience). For example, an individual may experience a specific color for every musical note (“C sharp is red”), or see every printed number or letter tinged with a specific hue (e.g., 5 is indigo and 7 is green). A given individual’s pairings remain stable over time, but the same note or letter doesn’t necessarily evoke the same color in different people. These experiences can sometimes enhance abilities (e.g., pitch memory, given the additional associations with color). They can also impair performance on tasks when additional sensory cues conflict with the “concurrent” experience. For example, suppose someone always experiences the digit 6 as green. If we gave them a memory or reaction-time task in which a 6 was printed in red, the conflict could interfere with their ability to respond to or remember that item, and they would need more cognitive control to process it.

Why synesthesia occurs is not well understood, but there are some prominent theories. One is based on the idea that, as we develop, our brain first creates more neurons and connections than it needs. It then goes through periods of “pruning,” in which unused connections are eliminated and unneeded neurons are removed through programmed cell death (apoptosis). The remaining networks are then strengthened. This process may allow our senses to become differentiated from one another. Adult synesthesia may occur when pruning fails to run its course, so that sensory connections that would normally be temporary become permanent.

Usually, we discuss diagnosable synesthesia in terms of strong inducer-concurrent connections (individuals who have them are often referred to as “strong synesthetes”), but many people experience cross-modal associations without a strong synesthetic experience. For example, one study found that many synesthetes and non-synesthetes alike associate low-pitched tones with darker colors and high-pitched tones with lighter colors (Ward et al., 2006). Many people also associate numbers, time, or musical notes with spatial direction, such as left to right or low to high (e.g., in many cultures, the perceived direction in which time moves corresponds to writing direction). As such, synesthesia may exist on more of a “spectrum” tied to our experiences with things like visual perception and language (e.g., writing direction). On this view, “strong synesthesia” is a somewhat natural extension of normal developmental processes.

Conclusion

Our impressive sensory abilities allow us to have both the most enjoyable and the most miserable experiences, as well as everything in between. Our eyes, ears, nose, tongue, and skin provide an interface through which the brain interacts with the world around us. While it is simpler to cover each sensory modality independently, our nervous system (and the cortex in particular) appears to contain considerable architecture for processing multiple modalities as a unified experience. Given this neurobiological setup and the diversity of behavioral phenomena associated with multimodal stimuli, the investigation of multimodal perception is likely to remain a topic of interest in experimental perception for many years to come.

Vocabulary

Multimodal phenomena: The effects that concurrent stimulation in more than one sensory modality has on the perception of events and objects in the world.

Superadditive effect of multisensory integration: The finding that responses to multimodal stimuli are typically greater than the sum of the independent responses to each unimodal component if it were presented on its own.

Multisensory convergence zones: A kind of neural intersection of information coming from the different senses; neurons that are devoted to the processing of one sense at a time send their information to the convergence zones, where it is processed together.

Unimodal cortex: Areas of the brain dedicated to the processing of a single modality.

McGurk Effect: A perceptual illusion (typically in the context of speech) that occurs when the auditory component of one sound is paired with the visual component of another sound, leading to the perception of a third sound.

Synesthesia: A condition in which a sensory stimulus presented in one area (often called the Inducer) evokes a sensation in a different area (called the Concurrent experience).