3.5 Auditory Perception

As is the case with visual perception, our auditory perception of the world relies on much more than the vibrating air entering our ear canals. Auditory perception often requires nuanced (and automatic) calculations regarding volume and timing to draw conclusions about events in our environment. It can also vary based on expectations related to context and familiarity, again revealing our reliance on top-down perceptual processes. We will return to this in more detail in the Language chapter, but I offer a few examples here.

Spatial Hearing

In contrast to vision, we have a 360° field of hearing. Our auditory acuity is, however, at least an order of magnitude poorer than vision in locating an object in space. Consequently, our auditory localization abilities are most useful in alerting us and allowing us to orient towards sources, with our visual sense generally providing the finer-grained analysis. Of course, there are differences between species, and some, such as barn owls and echolocating bats, have developed highly specialized sound localization systems.

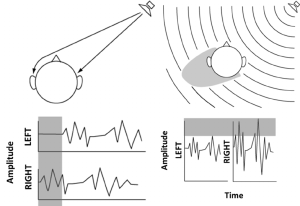

Our ability to locate sound sources in space is an impressive feat of neural computation. The two main sources of information both come from a comparison of the sounds at the two ears. The first is based on interaural time differences (ITDs) and relies on the fact that a sound source on the left will generate sound that reaches the left ear slightly before it reaches the right ear. Although sound is much slower than light, it is still fast enough that the difference in arrival time between the two ears is only a fraction of a millisecond. The largest ITD we encounter in the real world (when a sound is directly to the left or right of us) is only a little over half a millisecond. With some practice, humans can learn to detect an ITD of between 10 and 20 μs (i.e., 10 to 20 millionths of a second) (Klump & Eady, 1956).
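To get a feel for where the "little over half a millisecond" figure comes from, here is a minimal sketch in Python. It assumes a simple spherical-head approximation (often attributed to Woodworth), in which the ITD for a distant source is (r / c) × (θ + sin θ). The head radius and speed of sound below are illustrative assumptions, not values taken from the studies cited above, and the function name is my own.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumption: dry air at roughly 20 °C
HEAD_RADIUS_M = 0.0875       # assumption: an illustrative average adult head radius


def woodworth_itd(azimuth_deg, head_radius_m=HEAD_RADIUS_M,
                  speed_of_sound=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds for a distant source.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    Spherical-head approximation: ITD = (r / c) * (theta + sin(theta)),
    intended for azimuths between 0 and 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


for azimuth in (0, 15, 45, 90):
    itd_us = woodworth_itd(azimuth) * 1e6
    print(f"{azimuth:>2} degrees -> {itd_us:6.0f} microseconds")
```

Under these assumptions, a source directly to one side gives an ITD of roughly 650 μs, which is in line with the maximum described above. A shift of only a few degrees away from straight ahead changes the ITD by tens of microseconds, which is the scale at which trained listeners can detect differences.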

The second source of information is based on interaural level differences (ILDs). At higher frequencies (above about 1 kHz), the head casts an acoustic “shadow,” so that when a sound is presented from the left, the sound level at the left ear is somewhat higher than the sound level at the right ear. At very high frequencies, the ILD can be as much as 20 dB, and we are sensitive to differences as small as 1 dB.
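Because decibels are a logarithmic unit, it can help to translate these ILD values into ratios of sound pressure. The short Python sketch below uses the standard definition of a level difference, 20 × log10(p1 / p2); the function names are hypothetical and chosen only for illustration.

```python
import math


def ild_db(pressure_near_ear, pressure_far_ear):
    """Interaural level difference in dB from two sound pressures (same units)."""
    return 20.0 * math.log10(pressure_near_ear / pressure_far_ear)


def pressure_ratio_from_ild(ild):
    """Inverse of ild_db: the near/far pressure ratio implied by an ILD in dB."""
    return 10.0 ** (ild / 20.0)


for ild in (1.0, 20.0):
    ratio = pressure_ratio_from_ild(ild)
    print(f"ILD of {ild:4.1f} dB -> pressure ratio of about {ratio:.2f}")
```

A 20 dB ILD corresponds to a tenfold difference in sound pressure between the ears, whereas the 1 dB difference we can just detect corresponds to a pressure difference of only about 12%.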

As mentioned briefly in the discussion of the outer ear, information regarding the elevation of a sound source, or whether it comes from in front or behind, is contained in high-frequency spectral details that result from the filtering effects of the pinnae.

In general, we are most sensitive to ITDs at low frequencies (below about 1.5 kHz). At higher frequencies we can still perceive changes in timing based on the slowly varying temporal envelope of the sound but not the temporal fine structure (Bernstein & Trahiotis, 2002; Smith, Delgutte, & Oxenham, 2002), perhaps because of a loss of neural phase-locking to the temporal fine structure at high frequencies. In contrast, ILDs are most useful at high frequencies, where the head shadow is greatest. This use of different acoustic cues in different frequency regions led to the classic and very early “duplex theory” of sound localization (Rayleigh, 1907). For everyday sounds with a broad frequency spectrum, it seems that our perception of spatial location is dominated by interaural time differences in the low-frequency temporal fine structure (Macpherson & Middlebrooks, 2002).

As with vision, our perception of distance depends to a large degree on context. If we hear someone shouting at a very low sound level, we infer that the shouter must be far away, based on our knowledge of the sound properties of shouting. In rooms and other enclosed locations, reverberation can also provide information about distance: As a speaker moves farther away, the direct sound level decreases but the sound level of the reverberation remains about the same; therefore, the ratio of direct-to-reverberant energy decreases (Zahorik & Wightman, 2001).
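The reverberation cue can be illustrated with a few lines of Python. This is only a sketch under simplifying assumptions: the direct sound is treated as falling by about 6 dB for every doubling of distance (the free-field inverse-square law), the reverberant level is treated as constant throughout the room, and the specific levels (70 dB at 1 m, 55 dB of reverberation) are made-up numbers chosen for illustration.

```python
import math


def direct_level_db(distance_m, level_at_1m_db=70.0):
    """Direct sound level at a given distance.

    Assumes inverse-square spreading: about 6 dB lost per doubling of distance.
    """
    return level_at_1m_db - 20.0 * math.log10(distance_m)


def direct_to_reverberant_db(distance_m, level_at_1m_db=70.0,
                             reverb_level_db=55.0):
    """Direct-to-reverberant ratio in dB, with reverberation assumed constant."""
    return direct_level_db(distance_m, level_at_1m_db) - reverb_level_db


for distance in (1, 2, 4, 8):
    ratio = direct_to_reverberant_db(distance)
    print(f"{distance} m -> direct-to-reverberant ratio of {ratio:5.1f} dB")
```

With these illustrative numbers, the ratio shrinks steadily as the talker moves away and eventually turns negative, meaning the reverberation is stronger than the direct sound. A ratio of this kind is available to a listener even without knowing how loud the source was to begin with.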

Auditory Scene Analysis

There is usually more than one sound source in the environment at any one time—imagine talking with a friend at a café, with some background music playing, the rattling of coffee mugs behind the counter, traffic outside, and a conversation going on at the table next to yours. All these sources produce sound waves that combine to form a single complex waveform at the eardrum, the shape of which may bear very little relationship to any of the waves produced by the individual sound sources. Somehow the auditory system is able to break down, or decompose, these complex waveforms and allow us to make sense of our acoustic environment by forming separate auditory “objects” or “streams,” which we can follow as the sounds unfold over time (Bregman, 1990).

A number of heuristic principles have been formulated to describe how sound elements are grouped to form a single object or segregated to form multiple objects. Many of these originate from the early ideas proposed in vision by the so-called Gestalt psychologists, such as Max Wertheimer. According to these rules of thumb, sounds that are in close proximity, in time or frequency, tend to be grouped together. Also, sounds that begin and end at the same time tend to form a single auditory object. Interestingly, spatial location is not always a strong or reliable grouping cue, perhaps because the location information from individual frequency components is often ambiguous due to the effects of reverberation. Several studies have looked into the relative importance of different cues by “trading off” one cue against another. In some cases, this has led to the discovery of interesting auditory illusions (like the one discussed below), where melodies that are not present in the sounds presented to either ear emerge in the perception (Deutsch, 1979).

Auditory Illusions

An interesting auditory illusion that highlights our tendency to organize or group sounds based on experience was put together by Diana Deutsch and colleagues (Deutsch, 1975). In this illusion, musical notes are presented in stereo such that, when both channels are played together, it sounds as though ascending and descending scales are being played simultaneously, one in each ear. However, if you isolate each channel, you find that the notes within that channel are actually jumping around. An example of this illusion is below and works best if you use headphones:

Note that with headphones, if both sides are playing at the same time, our brain tends to organize the sounds so it appears that one scale is played in one ear and the other scale is played in the other ear.
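To make the structure of the stimulus concrete, the Python sketch below builds one version of it: an ascending and a descending scale are played at the same time, but successive notes of each scale alternate between the two channels. The choice of a C major scale, the octave, and which channel starts on the high note are assumptions made for illustration, and the names `channel_a` and `channel_b` are hypothetical labels rather than a claim about left and right in the original recordings.

```python
ASCENDING = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]  # assumed scale
DESCENDING = list(reversed(ASCENDING))


def scale_illusion_channels():
    """Split simultaneous ascending/descending scales across two channels.

    At each time step one note from each scale is played. The two notes
    swap channels on every step, so neither channel carries a whole scale.
    """
    channel_a, channel_b = [], []
    for step, (up, down) in enumerate(zip(ASCENDING, DESCENDING)):
        if step % 2 == 0:
            channel_a.append(down)
            channel_b.append(up)
        else:
            channel_a.append(up)
            channel_b.append(down)
    return channel_a, channel_b


channel_a, channel_b = scale_illusion_channels()
print("Channel A:", " ".join(channel_a))
print("Channel B:", " ".join(channel_b))
```

Printed out, each channel contains a sequence that leaps up and down in pitch, even though across the two channels every note of both scales is present at every step. The smooth lines that listeners report are therefore constructed by the auditory system rather than delivered to either ear.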

Another interesting auditory illusion identified by Diana Deutsch is referred to as the “speech-to-song” illusion (Deutsch, 2019). Deutsch famously (in some circles anyway) stumbled across this illusion when she looped a passage while editing spoken content for a CD. She came back to it later believing that she was hearing singing, but it was the same spoken passage on repeat. Here we find that our perception of speech can change depending on context: looping the speech repeatedly is enough to shift that context by providing a sense of rhythm and repetition. While there are speech events we consider categorically different from normal speech (e.g., rap or poetry), this illusion demonstrates how the same stimulus can shift from being perceived as one type of event to being perceived as another. A video demonstration can be found below, and a more detailed explanation can be found on Deutsch’s website. Note that if you go back and listen to the original sentence, for many listeners it seems as though it starts off as speech, breaks into song, and then goes back into speech again.