4.2 Concepts and Models of Selective Attention

The Cocktail Party

Beyond your own name, other words or concepts heard through the clamor of a party, particularly ones that are unusual or significant to you, can also snag your attention. Selective attention is the ability to select certain stimuli in the environment to process, while ignoring distracting information.

One way to get an intuitive sense of how attention works is to consider situations in which attention is used. A party provides an excellent example for our purposes. Many people may be milling around; there is a dazzling variety of colors, sounds, and smells; and the buzz of many conversations is striking. With so many conversations going on, how is it possible to select just one and follow it? You don’t have to be looking at the person talking; you may be listening with great interest to some gossip while pretending not to hear. However, once you are engaged in conversation with someone, you quickly become aware that you cannot also listen to other conversations at the same time. You are also probably not aware of how tight your shoes feel or of the smell of a nearby flower arrangement.

On the other hand, if someone behind you mentions your name, you typically notice it immediately and may start attending to that (much more interesting) conversation. This situation highlights an interesting set of observations. We have an amazing ability to select and track one voice, visual object, etc., even when a million things are competing for our attention, but at the same time, we seem to be limited in how much we can attend to at one time, which in turn suggests that attention is crucial in selecting what is important. How does it all work?

Dichotic Listening Studies

This cocktail party scenario is the quintessential example of selective attention, and it is essentially what some early researchers tried to replicate under controlled laboratory conditions as a starting point for understanding the role of attention in perception (e.g., Cherry, 1953; Moray, 1959). In particular, they used dichotic listening and shadowing tasks to evaluate the selection process. Dichotic listening simply refers to the situation when two messages are presented simultaneously to an individual, with one message in each ear. In order to control which message the person attends to, the individual is asked to repeat back or “shadow” one of the messages as he hears it. For example, let’s say that a story about a camping trip is presented to John’s left ear, and a story about Abe Lincoln is presented to his right ear. The typical dichotic listening task would have John repeat the story presented to one ear as he hears it. Can he do that without being distracted by the information in the other ear?

People can become pretty good at the shadowing task, and they can easily report the content of the message that they attend to. But what happens to the ignored message? Typically, people can tell you if the ignored message was a man’s or a woman’s voice, or other physical characteristics of the speech, but they cannot tell you what the message was about. In fact, many studies have shown that people in a shadowing task were not aware of a change in the language of the message (e.g., from English to German; Cherry, 1953), and they didn’t even notice when the same word was repeated in the unattended ear more than 35 times (Moray, 1959)! Only the basic physical characteristics, such as the pitch of the unattended message, could be reported.

On the basis of these types of experiments, it seems that we can answer the first question about how much information we can attend to very easily: not very much. We clearly have a limited capacity for processing information for meaning, making the selection process all the more important. The question becomes: How does this selection process work?

Information-Processing and the Filter Model of Attention

Cognitive psychology is often called human information processing, which reflects the approach taken by many cognitive psychologists in studying cognition. The stage approach, with the acquisition, storage, retrieval, and use of information in a number of separate stages, was influenced by the computer metaphor and the way people enter, store, and retrieve data from a computer (Reed, 2000). The stages in an information-processing model are:

- Sensory Store: brief storage for information in its original sensory form

- Filter: part of attention in which some perceptual information is blocked out and not recognized, while other information is attended to and recognized

- Pattern Recognition: stage in which a stimulus is recognized

- Selection: stage that determines what information a person will try to remember

- Short-Term Memory: memory with limited capacity, that lasts for about 20-30 seconds without attending to its content

- Long-Term Memory: memory that has no capacity limit and lasts from minutes to a lifetime

Broadbent’s Filter Model (aka Early Selection Model)

Using an information-processing approach, Donald Broadbent collected data on attention and was one of the first researchers to try to characterize the information “selection” process across stages. He used a dichotic listening paradigm (see above section), asking participants to listen simultaneously to messages played in each ear. Based on the difficulty that participants had in listening to the simultaneous messages, he proposed that a listener can attend to only one message at a time (Broadbent, 1952; Broadbent, 1954). More specifically, he asked enlisted men in England’s Royal Navy to listen to three pairs of digits. One digit from each pair was presented to one ear at the same time that the other digit from the pair was presented to the other ear. The subjects were asked to recall the digits in whatever order they chose, and almost all of the correct reports involved recalling all of the digits presented to one ear, followed by all the digits presented to the other ear. A second group of participants was asked to recall the digits in the order they were presented (i.e., as pairs). Their performance was worse than that of participants who were able to recall all digits from one ear and then the other.

To account for these findings, Broadbent hypothesized that the mechanism of attention was controlled by two components: a selective device or filter located early in the nervous system, and a temporary buffer store that precedes the filter (Broadbent, 1958). He proposed that the filter was tuned to one channel or the other, in an all-or-nothing manner.
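
The two components described above can be sketched in a few lines of Python. This is only an illustrative toy, not Broadbent's own formalism: the `Message` class, the `broadbent_filter` function, and the example stories (borrowed from the dichotic listening example earlier in this section) are assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Message:
    channel: str  # physical feature used for selection, e.g. "left" or "right" ear
    content: str  # meaning, which becomes available only after the filter


def broadbent_filter(buffer, attended_channel):
    """All-or-nothing early selection over a temporary buffer store."""
    # The buffer briefly holds every incoming message in raw sensory form.
    # The filter passes only the attended channel; everything else is
    # dropped before any analysis of meaning takes place.
    return [m.content for m in buffer if m.channel == attended_channel]


# Hypothetical input: one story per ear.
buffer = [
    Message("left", "story about a camping trip"),
    Message("right", "story about Abe Lincoln"),
]

print(broadbent_filter(buffer, attended_channel="left"))
# ['story about a camping trip']
```

Note that the unattended content never leaves the function, which is exactly the property that later findings called into question.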

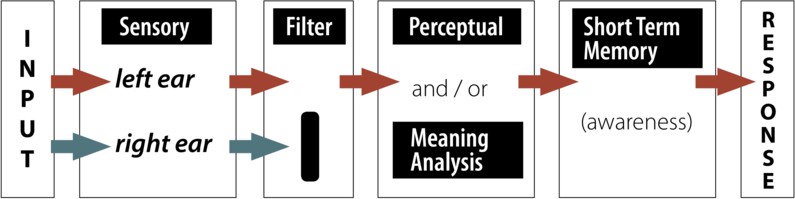

Broadbent found that people select information on the basis of physical features: the sensory channel (or ear) that a message was coming in, the pitch of the voice, the color or font of a visual message. People seemed vaguely aware of the physical features of the unattended information, but had no knowledge of the meaning. As a result, Broadbent argued that selection occurs very early, with no additional processing for the unselected information. A flowchart of the model might look like this:

Shortly after, it was discovered that if the unattended message became highly meaningful (for example, hearing one’s name as in Cherry’s Cocktail Party Effect, as mentioned above), then attention would switch automatically to the new message. Similarly, research conducted by J.R. Stroop (1935) and others indicates that in some situations, meaning is processed so automatically that it is difficult to ignore. In his now-famous task (and its many variations), Stroop found that when participants were asked to read color words (e.g., red) written in differently colored ink (e.g., blue), the mismatch caused only minor delays in reading time. However, when participants were asked to say the color of the ink and ignore the actual word, the mismatch caused much more substantial delays. This difference in processing speed when the language and color information are mismatched (or “incongruent”) has come to be known as the Stroop Effect. It suggests that the meaning of the words is processed automatically and early on in our attentional process, so that it takes effort to ignore the meaning and attend to other information (e.g., the color of the ink). You can get a sense of this for yourself using the stimuli below; if you are like most people, you should have a much more difficult time labeling the color of ink in which the words are written in the second sequence (mismatched, “incongruent” stimuli).
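
To make the congruent and incongruent conditions concrete, the sketch below enumerates every pairing of a color word with an ink color and labels each one. The three-color set and the function name `stroop_trials` are illustrative assumptions, not Stroop's original materials.

```python
from itertools import product

# Illustrative color set; any list of color names would work.
COLORS = ["red", "blue", "green"]


def stroop_trials(colors):
    """Return (word, ink, condition) triples for every word/ink pairing."""
    trials = []
    for word, ink in product(colors, repeat=2):
        # A trial is congruent when the word names its own ink color.
        condition = "congruent" if word == ink else "incongruent"
        trials.append((word, ink, condition))
    return trials


trials = stroop_trials(COLORS)
incongruent = [t for t in trials if t[2] == "incongruent"]

for word, ink, condition in trials:
    print(f"word={word:<5} ink={ink:<5} {condition}")
```

In the ink-naming version of the task, the correct response on each trial is the `ink` value, and the `word` value is the distractor that has to be ignored.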

Such results led to the paradox that the meaning of a message can be understood before it is selected, indicating that Broadbent needed to revise his theory (Craik & Baddeley, 1995). Despite its inconsistencies with emerging findings, the filter model remains the first and most influential information-processing model of human cognition.

Anne Treisman and Feature Integration Theory

Anne Treisman is one of the most influential cognitive psychologists in the world today. For over four decades, she has been using innovative research methods to define fundamental issues in the area of attention and perception. Best known for her Feature Integration Theory (1980, 1986), Treisman’s hypotheses about the mechanisms involved in information processing have formed a starting point for many theorists in this area of research.

In 1967, while Treisman worked as a visiting scientist in the psychology department at Bell Telephone Laboratories, she published an influential paper in Psychological Review that was central to the development of selective attention as a scientific field of study. This paper articulated many of the fundamental issues that continue to guide studies of attention to this day. In the years following, Treisman began to explore the notion that attention is involved in integrating separate features to form visual perceptual representations of objects. Using a stopwatch and her children as research participants, she found that the search for a red ‘X’ among red ‘Os’ and blue ‘Xs’ was slow and laborious compared to the search for either shape or color alone (Gazzaniga et al., 2002). These findings were corroborated by results from testing adult participants in the laboratory and provided the basis of a new research program, in which Treisman conducted experiments exploring the relationships between feature integration, attention, and object perception (Treisman & Gelade, 1980).

In 1980, Treisman and Gelade published a seminal paper proposing her enormously influential Feature Integration Theory (FIT). Treisman’s research demonstrated that during the early stages of object perception, early vision encodes features such as color, form, and orientation as separate entities (in “feature maps”) (Treisman, 1986). Focused attention to these features recombines the separate features resulting in correct object perception. In the absence of focused attention, these features can bind randomly to form illusory conjunctions (Treisman & Schmidt, 1982; Treisman, 1986). Feature integration theory has had an overarching impact both within and outside the area of psychology.

Feature Integration Theory Experiments

According to Treisman’s Feature Integration Theory, perception of objects is divided into two stages:

- Pre-Attentive Stage: The first stage in perception is so named because it happens automatically, without effort or attention by the perceiver. In this stage, an object is analyzed into its features (e.g., color, texture, and shape). Treisman suggests that the reason we are unaware of the breakdown of an object into its elementary features is that this analysis occurs early in the perceptual processes, before we have become conscious of the object. Evidence: Treisman created a display of four objects flanked by two black numbers. This display was flashed on a screen for one-fifth of a second and followed by a random dot masking field in order to eliminate residual perception of the stimuli. Participants were asked to report the numbers first, followed by what they saw at each of the four locations where the shapes had been. In 18 percent of trials, participants reported seeing objects that consisted of a combination of features from two different stimuli (e.g., the color of one and the shape of another). These combinations of features from different stimuli are called illusory conjunctions (Treisman and Schmidt, 1982). The experiment also showed that illusory conjunctions could occur even if the stimuli differ greatly in shape and size. According to Treisman, illusory conjunctions occur because early in the perceptual process, features may exist independently of one another, and can therefore be incorrectly combined in laboratory settings when briefly flashed stimuli are followed by a masking field (Treisman, 1986).

- Focused Attention Stage: During this second stage of perception features are recombined to form whole objects. Evidence: Treisman repeated the illusory conjunction experiment, but this time, participants were instructed to ignore the flanking numbers, and to focus their attention on the four target objects. Results demonstrated that this focused attention eliminated illusory conjunctions, so that all shapes were paired with their correct colors (Treisman and Schmidt, 1982). The experiment demonstrates the role of attention in the correct perception of objects.
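
The two stages can be illustrated with a small sketch. It assumes a hypothetical three-object display and treats "feature maps" as plain sets of colors and shapes; the function names are invented for the example and are not part of Treisman's theory.

```python
from itertools import product

# Hypothetical display: location -> (color, shape)
display = {
    0: ("red", "triangle"),
    1: ("blue", "circle"),
    2: ("green", "square"),
}


def preattentive_stage(display):
    """Split objects into separate, free-floating feature maps."""
    colors = {color for color, _ in display.values()}
    shapes = {shape for _, shape in display.values()}
    return colors, shapes


def possible_illusory_conjunctions(display):
    """Color/shape pairings that could be reported but were never shown."""
    colors, shapes = preattentive_stage(display)
    shown = set(display.values())
    # Without attention, any color may pair with any shape.
    return set(product(colors, shapes)) - shown


def focused_attention(display, location):
    """Attending to a location binds the features found at that location."""
    return display[location]


print(sorted(possible_illusory_conjunctions(display)))
print(focused_attention(display, 1))
```

In this toy version, a report such as a red circle is possible only when the location information is discarded, which mirrors the claim that focused attention is what binds features correctly.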

Treisman’s Attenuation Model

Broadbent’s “early-selection” filter model makes sense, but if you think about it you already know that it cannot account for all aspects of the Cocktail Party Effect. What doesn’t fit? The fact is that you tend to hear your own name when it is spoken by someone, even if you are deeply engaged in a conversation. We mentioned earlier that people in a shadowing experiment were unaware of a word in the unattended ear that was repeated many times, and yet many people noticed their own name in the unattended ear even if it occurred only once.

Anne Treisman (1960) carried out a number of dichotic listening experiments in which she presented two different stories to the two ears. As usual, she asked people to shadow the message in one ear. As the stories progressed, however, she switched the stories to the opposite ears.

Treisman found that individuals spontaneously followed the story, or the content of the message, when it shifted from the left ear to the right ear. Then they realized they were shadowing the wrong ear and switched back.

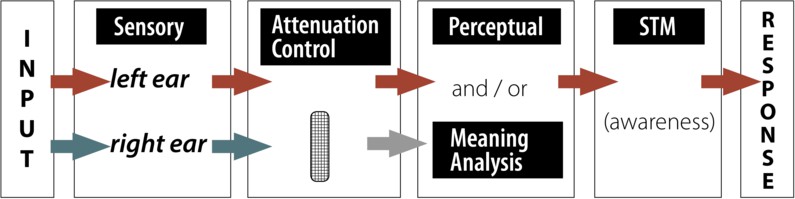

Results like this, and the fact that you tend to hear meaningful information even when you aren’t paying attention to it, suggest that we do monitor the unattended information to some degree on the basis of its meaning. Therefore, the filter theory can’t be right to suggest that unattended information is completely blocked at the sensory analysis level. Instead, Treisman suggested that selection starts at the physical or perceptual level, but that the unattended information is not blocked completely; it is just weakened, or attenuated. As a result, highly meaningful or pertinent information in the unattended ear will get through the filter for further processing at the level of meaning. The accompanying figure shows information going in both ears, and in this case there is no filter that completely blocks nonselected information. Instead, selection of the left ear information strengthens that material, while the nonselected information in the right ear is weakened. However, if the preliminary analysis shows that the nonselected information is especially pertinent or meaningful (such as your own name), then the Attenuation Control will instead strengthen the more meaningful information.
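
One way to picture attenuation is as a weakened signal compared against a threshold that is lower for pertinent words. The sketch below makes that idea concrete. The threshold framing and all of the numbers are assumptions chosen for illustration, not values taken from Treisman's experiments.

```python
ATTENUATION = 0.3        # assumed gain applied to the unattended channel
DEFAULT_THRESHOLD = 0.5  # assumed signal strength needed for analysis of meaning
LOW_THRESHOLDS = {"your_name": 0.2}  # pertinent words need less signal


def reaches_awareness(word, attended):
    """Return True if a word is strong enough to be processed for meaning."""
    # Attended input passes at full strength; unattended input is
    # weakened rather than blocked outright.
    strength = 1.0 if attended else ATTENUATION
    threshold = LOW_THRESHOLDS.get(word, DEFAULT_THRESHOLD)
    return strength >= threshold


print(reaches_awareness("camping", attended=True))      # True
print(reaches_awareness("camping", attended=False))     # False
print(reaches_awareness("your_name", attended=False))   # True
```

Setting `ATTENUATION` to 0 turns this back into an all-or-nothing filter, which is one way to see how the attenuation model generalizes Broadbent's.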

Late Selection Models

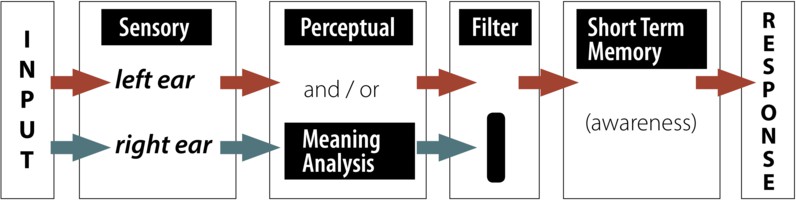

Other selective attention models have been proposed as well. A late selection or response selection model proposed by Deutsch and Deutsch (1963) suggests that all information in the unattended ear is processed on the basis of meaning, not just the selected or highly pertinent information. However, only the information that is relevant for the task response gets into conscious awareness. This model is consistent with ideas of subliminal perception; in other words, that you don’t have to be aware of or attending a message for it to be fully processed for meaning.

You might notice that this figure looks a lot like that of the Early Selection model—only the location of the selective filter has changed, with the assumption that analysis of meaning occurs before selection occurs, but only the selected information becomes conscious.

Multimode Model

Why did researchers keep coming up with different models? Because no model really seemed to account for all the data, some of which indicates that non-selected information is blocked completely, whereas other studies suggest that it can be processed for meaning. The multimode model addresses this apparent inconsistency, suggesting that the stage at which selection occurs can change depending on the task. Johnston and Heinz (1978) demonstrated that under some conditions, we can select what to attend to at a very early stage and we do not process the content of the unattended message very much at all. Analyzing physical information, such as attending to information based on whether it is a male or female voice, is relatively easy; it occurs automatically, rapidly, and doesn’t take much effort. Under the right conditions, we can select what to attend to on the basis of the meaning of the messages. However, the late selection option (processing the content of all messages before selection) is more difficult and requires more effort. The benefit, though, is that we have the flexibility to change how we deploy our attention depending upon what we are trying to accomplish, which is one of the greatest strengths of our cognitive system.
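
The trade-off between the two modes can be sketched by counting how many messages must be analyzed for meaning in each. The message format, the function names, and the use of an analysis count as a stand-in for effort are all simplifying assumptions made for this example.

```python
def early_selection(messages, voice):
    """Select by a physical feature; only the selected message is analyzed."""
    selected = [m for m in messages if m["voice"] == voice]
    analyses = len(selected)  # meaning is extracted after selection
    return [m["text"] for m in selected], analyses


def late_selection(messages, topic):
    """Analyze every message for meaning, then select by content."""
    analyses = len(messages)  # meaning is extracted before selection
    selected = [m for m in messages if topic in m["text"]]
    return [m["text"] for m in selected], analyses


# Hypothetical simultaneous messages.
messages = [
    {"voice": "female", "text": "camping trip story"},
    {"voice": "male", "text": "Abe Lincoln story"},
    {"voice": "male", "text": "weather report"},
]

print(early_selection(messages, voice="female"))
print(late_selection(messages, topic="Lincoln"))
```

Early selection here analyzes one message, while late selection analyzes all three but can pick a message by what it is about, which is the flexibility-for-effort trade described above.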

This discussion of selective attention has focused on experiments using auditory material, but the same principles hold for other perceptual systems as well. Neisser (1979) investigated some of the same questions with visual materials by superimposing two semi-transparent video clips and asking viewers to attend to just one series of actions. As with the auditory materials, viewers often were unaware of what went on in the other clearly visible video. Twenty years later, Simons and Chabris (1999) explored and expanded these findings using similar techniques, and triggered a flood of new work in an area referred to as inattentional blindness.

Dichotic listening: The process of receiving different auditory messages presented simultaneously to each ear.

Shadowing: A task in which a participant repeats aloud a message word-for-word at the same time that the message is being presented, often while other stimuli are presented in the background.

Inattentional blindness: The failure to notice a fully visible, but unexpected, object or event when attention is devoted to something else.